SignLLM, Bridging the Silence: AI-Powered Solutions for the Hearing Impaired

Technology has been a boon for many, but for people with hearing disabilities it has often felt like a distant echo. The advent of Artificial Intelligence (AI) is rewriting this narrative. It is driving advances in audiology and promising a future where communication barriers for the hearing impaired are significantly reduced.

Intelligent devices and training modules in this domain are no longer mere electronic amplification tools; they have become sophisticated personal assistants and programs that adapt to individual needs and environments in real time.

Let’s look at the advancements in AI-powered hearing technology and explore the potential of SignLLM, a language model designed specifically for sign language, to enhance communication for the deaf and hard-of-hearing community.

AI: A New Language for the Deaf

Traditional hearing aids often struggled to adapt to the complexities of real-world sound environments. However, AI has changed the game. From speech-to-text transcriptions to real-time sign language interpretation, AI is becoming an indispensable tool in empowering the deaf community.

One of the most promising developments in this field is the creation of AI-powered language models specifically designed for sign languages. SignLLM, for instance, is a groundbreaking model that is being trained on vast datasets of sign language videos. This model has the potential to revolutionize how deaf individuals communicate and access information.

What Is a Sign Language?

A sign language is a visual form of communication that uses hand shapes, facial expressions, and body gestures. It is a complete language with its own grammar and syntax, just like spoken languages. Different countries have their own sign languages, but they share some common features. Sign languages are essential for deaf and hard-of-hearing people to interact and express themselves effectively.

SignLLM: A Game-Changer

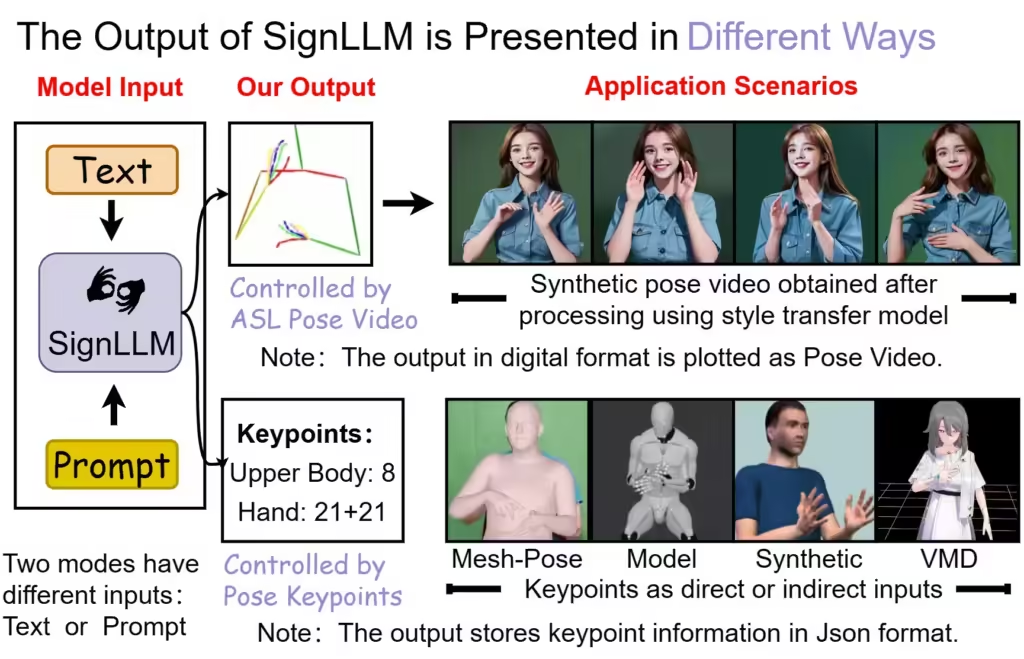

SignLLM, through its ability to understand and generate sign language, can be used in a multitude of ways. Imagine a world where deaf individuals can interact with computers and smartphones using sign language, where virtual assistants can understand and respond to signed queries, and where educational content is accessible in sign language with just a click.

Moreover, SignLLM can be a powerful tool for language preservation. Many sign languages are at risk of extinction due to a lack of documentation and resources. By creating AI models like SignLLM, we can help preserve this valuable linguistic and cultural heritage.

SignLLM: A Sign Language Production LLM

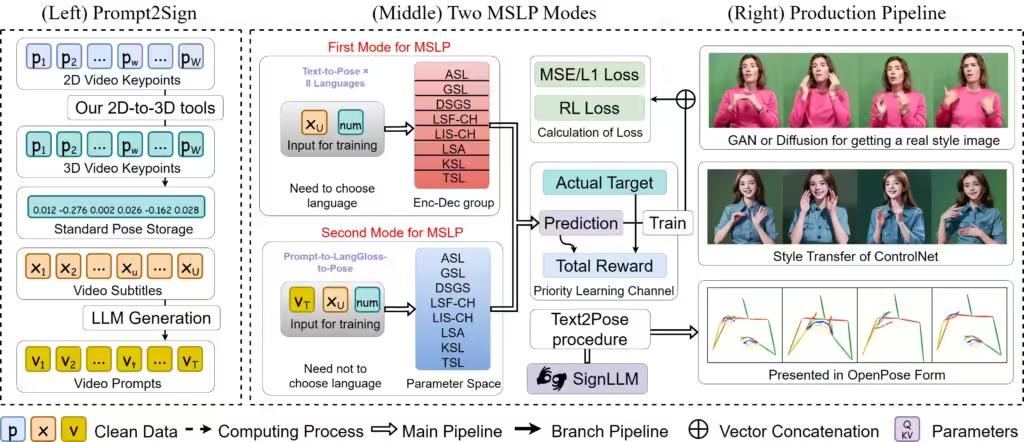

Its dataset, Prompt2Sign, transforms a vast array of videos into a streamlined, model-friendly format, optimized for training with translation models such as sequence-to-sequence and text-to-text.
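
To make the idea of a "model-friendly format" more concrete, here is a minimal sketch of how per-frame keypoints extracted from a video could be flattened into a text-like target string and paired with a source sentence for sequence-to-sequence training. This is an illustration only: the `PoseFrame` class, the `serialize_frames` and `make_training_pair` helpers, the `" | "` frame separator, and the keypoint values are all assumptions made for this example, not the actual Prompt2Sign format.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class PoseFrame:
    # Hypothetical container: one video frame of 2D keypoints as (x, y) pairs.
    keypoints: List[Tuple[float, float]]


def serialize_frames(frames: List[PoseFrame], precision: int = 2) -> str:
    """Flatten frames into one string; frames are separated by ' | '."""
    segments = []
    for frame in frames:
        # Write every coordinate with a fixed number of decimals.
        coords = [f"{value:.{precision}f}" for point in frame.keypoints for value in point]
        segments.append(" ".join(coords))
    return " | ".join(segments)


def make_training_pair(text: str, frames: List[PoseFrame]) -> Dict[str, str]:
    """Build a source/target record of the kind a seq2seq model could consume."""
    return {"source": text, "target": serialize_frames(frames)}


# Made-up keypoints for a two-frame clip.
frames = [
    PoseFrame([(0.5, 0.25), (0.75, 0.5)]),
    PoseFrame([(0.5, 0.5), (0.75, 0.75)]),
]
pair = make_training_pair("hello", frames)
print(pair["target"])
```

Turning pose data into plain token sequences is what lets standard translation-style models treat sign production as a text-to-text problem.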

SignLLM is the first multilingual Sign Language Production (SLP) model. It includes two novel multilingual SLP modes that allow sign language gestures to be generated from input text or a prompt. Both modes can use a new loss and a module based on reinforcement learning, which accelerates training by enhancing the model’s capability to autonomously sample high-quality data.

What Is an LLM?

A Large Language Model (LLM) is an advanced AI system designed to understand and generate human language. It is trained on vast amounts of text data, enabling it to perform a wide range of language-related tasks such as translation, summarization, and text generation. LLMs use deep learning techniques, particularly neural networks, to process and predict language patterns. These models can comprehend context, generate coherent text, and answer questions based on the input they receive. Notable examples include OpenAI’s ChatGPT and Google’s Gemini.

Multi-Language Proficiency: SignLLM’s Potential

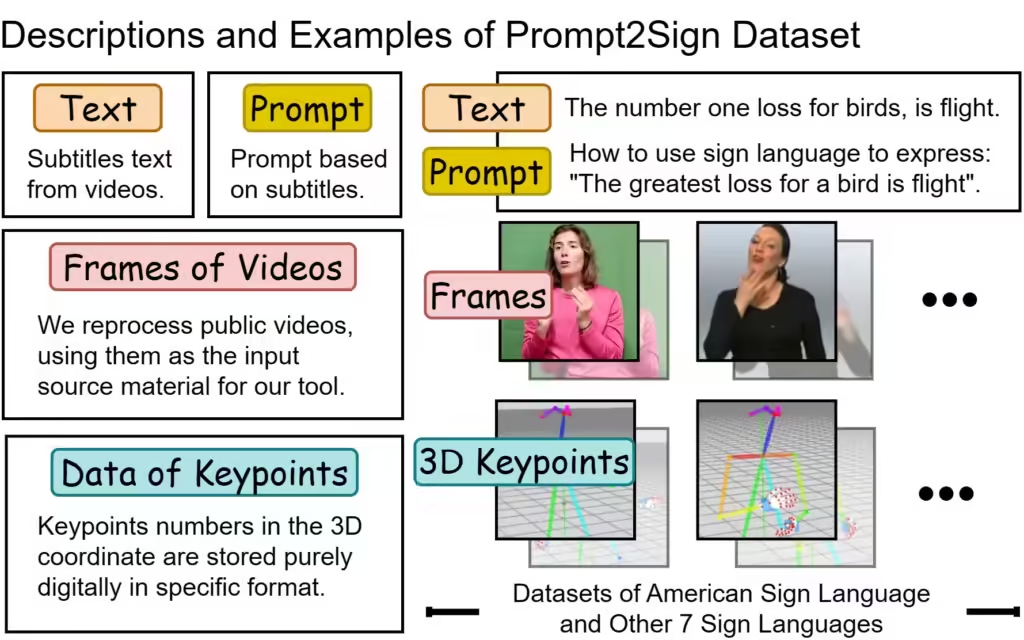

The ability of SignLLM to handle multilingual sign language creation with ease is one of its most impressive features. The model has shown state-of-the-art performance on SLP tasks in eight different sign languages by utilizing the breadth of the Prompt2Sign dataset.

Through thorough benchmarking, the researchers have demonstrated SignLLM’s proficiency across eight sign languages, on tasks such as American Sign Language Production (ASLP). These empirical investigations shed light on the subtleties and difficulties involved in producing sign language.

Top: Prompt2Sign dataset input | Bottom: Multiple outputs [Courtesy: SignLLM]

The main objective of Sign Language Production (SLP) is to generate human-like sign avatars, and a Text-to-Gloss framework is one way to do so. Deep learning-based SLP techniques require multiple phases. The text is first translated into a ‘sign gloss language’ with the linguistic attributes needed to express motions and positions.

The model then creates a video from the ‘sign gloss language’, capturing deeper characteristics through the parameters of the neural network architecture. The final video is further processed to produce more convincing avatar videos that resemble actual humans.
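
The phases described above can be sketched in miniature. The example below is a toy stand-in, not SignLLM's method: it replaces the neural networks with hand-written lookup tables so that the data flow (text, then gloss, then pose frames) is easy to follow. The names `GLOSS_LEXICON`, `STOPWORDS`, `POSE_LIBRARY`, `text_to_gloss`, and `gloss_to_poses`, along with all the values in them, are hypothetical.

```python
from typing import Dict, List

# Hypothetical word-to-gloss lexicon; a real system would learn this mapping.
GLOSS_LEXICON: Dict[str, str] = {
    "hello": "HELLO",
    "you": "YOU",
    "thanks": "THANK-YOU",
}

# Function words that this toy gloss notation simply drops.
STOPWORDS = {"a", "an", "the", "is", "are", "to"}

# Hypothetical pose library: each gloss maps to a short list of (x, y) frames.
POSE_LIBRARY: Dict[str, List[List[float]]] = {
    "HELLO": [[0.5, 0.2], [0.6, 0.2]],
    "YOU": [[0.5, 0.5]],
    "THANK-YOU": [[0.5, 0.3], [0.5, 0.6]],
}


def text_to_gloss(text: str) -> List[str]:
    """Phase 1: map a written sentence to a sequence of glosses."""
    glosses = []
    for raw_word in text.lower().split():
        word = raw_word.strip(".,!?")
        if not word or word in STOPWORDS:
            continue
        # Unknown words fall back to their upper-cased form.
        glosses.append(GLOSS_LEXICON.get(word, word.upper()))
    return glosses


def gloss_to_poses(glosses: List[str]) -> List[List[float]]:
    """Phase 2: concatenate the pose frames for each gloss, skipping unknown ones."""
    frames: List[List[float]] = []
    for gloss in glosses:
        frames.extend(POSE_LIBRARY.get(gloss, []))
    return frames


glosses = text_to_gloss("Hello, how are you?")
poses = gloss_to_poses(glosses)
print(glosses, len(poses))
```

In a real pipeline each lookup would be a trained model, and a final rendering stage would turn the pose frames into avatar video.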

Prompt2Sign is a groundbreaking dataset that tracks the upper body movements of sign language demonstrators on a massive scale. It was presented by researchers from Rutgers University, Australian National University, Data61/CSIRO, Carnegie Mellon University, University of Texas at Dallas, and University of Central Florida. As the first comprehensive dataset covering these eight sign languages, it represents a significant advancement in the field of multilingual sign language production and recognition.

As the SignLLM project develops and grows, its researchers remain dedicated to promoting innovation, encouraging collaboration, and enabling people with hearing impairments to interact and communicate with the world more successfully. There is a bright future ahead for AI-augmented sign language technology, and SignLLM is spearheading the movement toward a more accessible and inclusive society.
